In an item published recently in the ANU Reporter – Do we have a Frankenstein problem? – ANU lecturer Dr Russell Smith focused on artificial intelligence and the various ways in which automated systems affect the lives of everyday people. One question which flows from this is whether the law, as it usually does, is lagging behind technological development, and whether some kind of legal framework is needed.

Dr Smith identifies Mary Shelley’s Frankenstein (or, correctly, the monster created by Dr Frankenstein) as the first artificially created life which became a threat to humans in the realm of literary fiction. The mid-20th century science fiction writer Isaac Asimov (also mentioned by Dr Smith) famously created the three laws of robotics, the first of which was to the effect that robots could not harm humans. In the 2004 film I, Robot, loosely based on Asimov’s stories, the robots break loose from these constraints.

There are obvious aspects of artificial intelligence (AI) which can harm humans. If the systems in your self-driving car fail, you will likely be injured or killed. But can you be harmed by AI precisely because it is working perfectly? And if there is potential for harm, is legal protection available?

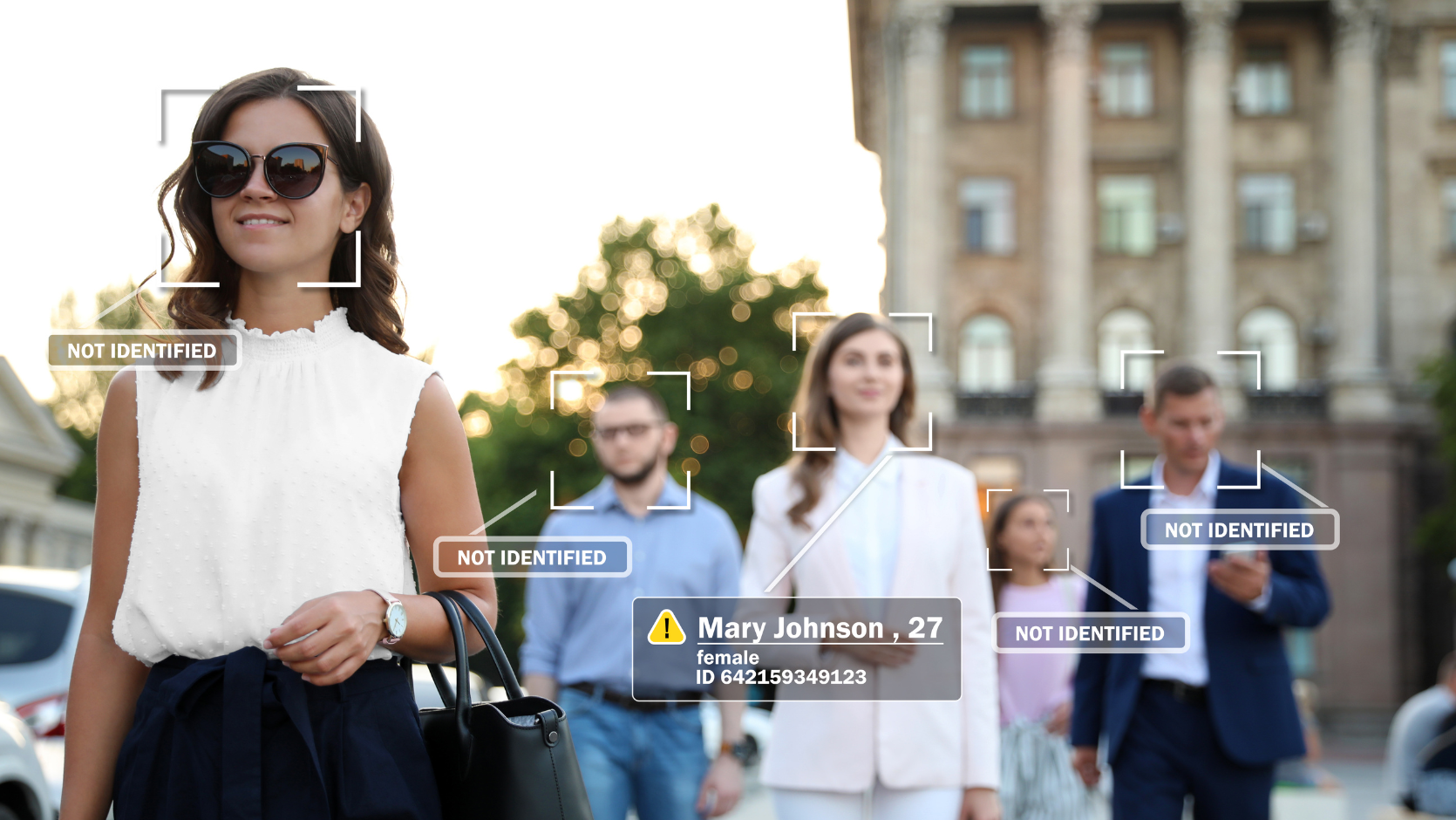

To what extent are rights of individuals threatened by autonomous systems?

A useful start lies in the concept of random numbers and, importantly, understanding that a computer program cannot, on its own, produce a truly “random” number. Software random number generators are all driven by algorithms which produce the numbers. If you know what the algorithm is, and the starting value (the “seed”) it was given, you can predict what the numbers will be.

So the numbers produced by random number generators are not random at all. The point here is that it is not enough to know what an automated system is meant to do; to understand its implications fully it is also necessary to know how the system does what it does.
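The point about predictability can be illustrated with a short Python sketch. It uses the standard library's `random` module; the function name `draw` and the seed values are chosen purely for illustration. Two generators started from the same seed produce exactly the same sequence.

```python
import random


def draw(seed, count=5):
    """Return `count` pseudo-random integers between 1 and 100.

    `seed` is the starting value handed to the generator's algorithm.
    """
    rng = random.Random(seed)  # an independent generator with a known seed
    return [rng.randint(1, 100) for _ in range(count)]


# Illustrative seed values only; any integers would do.
first = draw(seed=2024)
second = draw(seed=2024)

# Same algorithm, same seed: the "random" numbers are identical.
print(first == second)
```

Anyone who knows both the algorithm and the seed can reproduce the output, which is why knowing how a system works matters as much as knowing what it is meant to do.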

The broad question posed by Dr Smith’s article is the extent to which the rights of individuals are threatened by systems working autonomously, meaning most obviously without human input into the activities they conduct. The question we ask here is whether the law is able to safeguard those rights.

Flaws in Centrelink “robo debt” algorithm result in thousands of baseless demands

While the use in warfare of autonomous weaponised drones, cited by Dr Smith, is a very obvious and dramatic example, at a more mundane level, readers will recall the recent uproar following the penalising by Centrelink of social security recipients who had allegedly been overpaid.

This “crackdown” was managed by an automated system, and presumably the attraction of an automated system was that it was easily and quickly able to launch an enforcement blitz which would have consumed large amounts of the time of human staff.

The system was meant to detect overpayments but, as has just been said in relation to random numbers, the key was how the system went about detecting them.

As it turned out, the “robo debt collector” worked largely on averages, could not adequately deal with variations in income, and had trouble distinguishing between gross and net incomes. As a result, repayment demands were issued to around 20,000 welfare recipients who owed little, if indeed anything at all.
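To see how reliance on averages can manufacture a debt, consider the following sketch. It is not the actual Centrelink calculation. The payment amount, income threshold, reduction rate and the sample person's earnings are all hypothetical figures chosen to make the arithmetic easy to follow. The person works for half the year and claims a benefit only in the fortnights when they earn nothing.

```python
# All figures below are hypothetical and for illustration only.
FORTNIGHTS_PER_YEAR = 26
BASE_PAYMENT = 500.0      # assumed full benefit per fortnight
INCOME_FREE_AREA = 300.0  # assumed income allowed before the benefit reduces
TAPER_RATE = 0.5          # assumed reduction per dollar above that amount


def entitlement(income):
    """Benefit payable in a fortnight, given income for that fortnight."""
    reduction = max(0.0, income - INCOME_FREE_AREA) * TAPER_RATE
    return max(0.0, BASE_PAYMENT - reduction)


def alleged_debt(assessed_incomes, payments):
    """Total paid above entitlement, using the incomes the system assumes."""
    return sum(
        max(0.0, paid - entitlement(income))
        for income, paid in zip(assessed_incomes, payments)
    )


# Thirteen fortnights of work with no benefit, then thirteen with no income.
actual_incomes = [2000.0] * 13 + [0.0] * 13
payments = [0.0] * 13 + [BASE_PAYMENT] * 13

# Averaging spreads the annual income evenly over every fortnight.
average_income = sum(actual_incomes) / FORTNIGHTS_PER_YEAR
averaged_incomes = [average_income] * FORTNIGHTS_PER_YEAR

debt_actual = alleged_debt(actual_incomes, payments)
debt_averaged = alleged_debt(averaged_incomes, payments)

print(debt_actual)    # 0.0
print(debt_averaged)  # 4550.0
```

On these assumed figures the person was paid exactly what they were entitled to, yet the averaged calculation treats them as earning 1,000 every fortnight and alleges an overpayment of 350 in each of the thirteen fortnights in which they received the benefit.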

Elsewhere, while the US stock market “Flash Crash” of 2010 was triggered by intentional rogue behaviour, that conduct relied on automated trading algorithms which bought and sold, and made and cancelled offers, at lightning speed. The Dow Jones lost almost 1000 points in under ten minutes before making a partial recovery, and the cost was serious.

Individuals targeted by automated systems forced to collect evidence to prove their innocence

Obviously, if someone claims from you money which in fact you do not owe, the law has well-established processes through which you can resist the claim, as long as you are able to assemble the facts evidencing your position. However, this doesn’t mean that there isn’t a problem.

Looking at the “robo-debt” issue, the first problem is that when we get a very official-looking letter, we are conditioned to think that what it says must be right, and that in itself will have unsettled many recipients.

Secondly, people do not necessarily keep the kinds of records which will easily enable them to rebut a baseless claim. And, even if they do have such records, in a practical sense they suffer the disadvantage of needing to “prove innocence”, and possibly suffer financial disadvantage in doing so.

So, while remedies are available after the event, that fact alone does not amount to a complete solution.

Disclosures by government agencies under freedom of information legislation

Where government agencies are concerned, it would be possible to require them to disclose routinely their use of automated systems to make decisions affecting the interests of citizens. Freedom of information legislation throughout Australia (in NSW, the Government Information (Public Access) Act 2009) requires that agencies regularly publish policies and procedures, so it would not seem too difficult for agencies to make public those processes which are using only automation and are beyond human control.

A related approach can be found in privacy legislation (see the Privacy Act 1988 (Cth)), under which government agencies and businesses (other than small businesses) which hold or collect personal information are obliged to disclose the purposes for which they use that information, and to refrain from using it for other purposes without prior notification.

This could be supplemented by a requirement to disclose to affected individuals each instance where some right or interest of theirs would be dealt with by an automated system.

Giving more weight to human challenges of decisions made by machines

A more radical approach, based on this kind of disclosure, might be inspired by the memory of Sir Arthur Kekewich, a Chancery Division judge for England and Wales at the turn of the 20th century.

Sir Arthur had a reputation (to what extent actually deserved is a bit hard to tell at this remove) for being such a poor judge that one counsel is said to have opened his address by saying “This is an appeal against a decision of Mr Justice Kekewich, my Lords, but there are other grounds to which I shall come in due course”.

Drawing on His Honour’s legacy, an approach could be available under which enforcement of any decision made by a machine could be halted when challenged, and proceeded with only when confirmed.

If the onus were on the business or agency to establish the validity of the action, rather than on the individual to disprove it, that might provide some real protection.

What happens when machines absorb our flaws and become autonomous?

Of course, the examples given here are not the truly scary things, like automated military drones which cannot be stopped even when the humans have changed their minds. Other examples which can be viewed as either full of potential or deeply alarming include the creations of science fiction, or computers like IBM Watson, which are not only programmed to answer questions, but can learn in the same way that humans do by reprogramming themselves as a result of experience, then starting to act differently, and perhaps unpredictably.

Recent media attention has been given to the emergence of artificial intelligence which mimics the worst aspects of human nature, rather than the best ones. (For example, see Stephen Buranyi’s Rise of the racist robots – how AI is learning all our worst impulses.)

Believe this sort of thing is still in the future? Think about the predictive text function on your mobile, or the voice recognition system on your computer. All you need to do is to say: “Hey Siri – tell me more about algorithms!”

For more information please see the articles below.

Predicting recidivism – the questionable role of algorithms in the criminal justice system

Guilty or not guilty – could computers replace judges in a court of law?

Inventiveness of ChatGPT poses risk of defamation

AI-generated deepfake images create bullying danger

Can I claim copyright if I write a novel or research paper using generative AI?

Driverless cars are coming – but whose fault will it be when they crash?